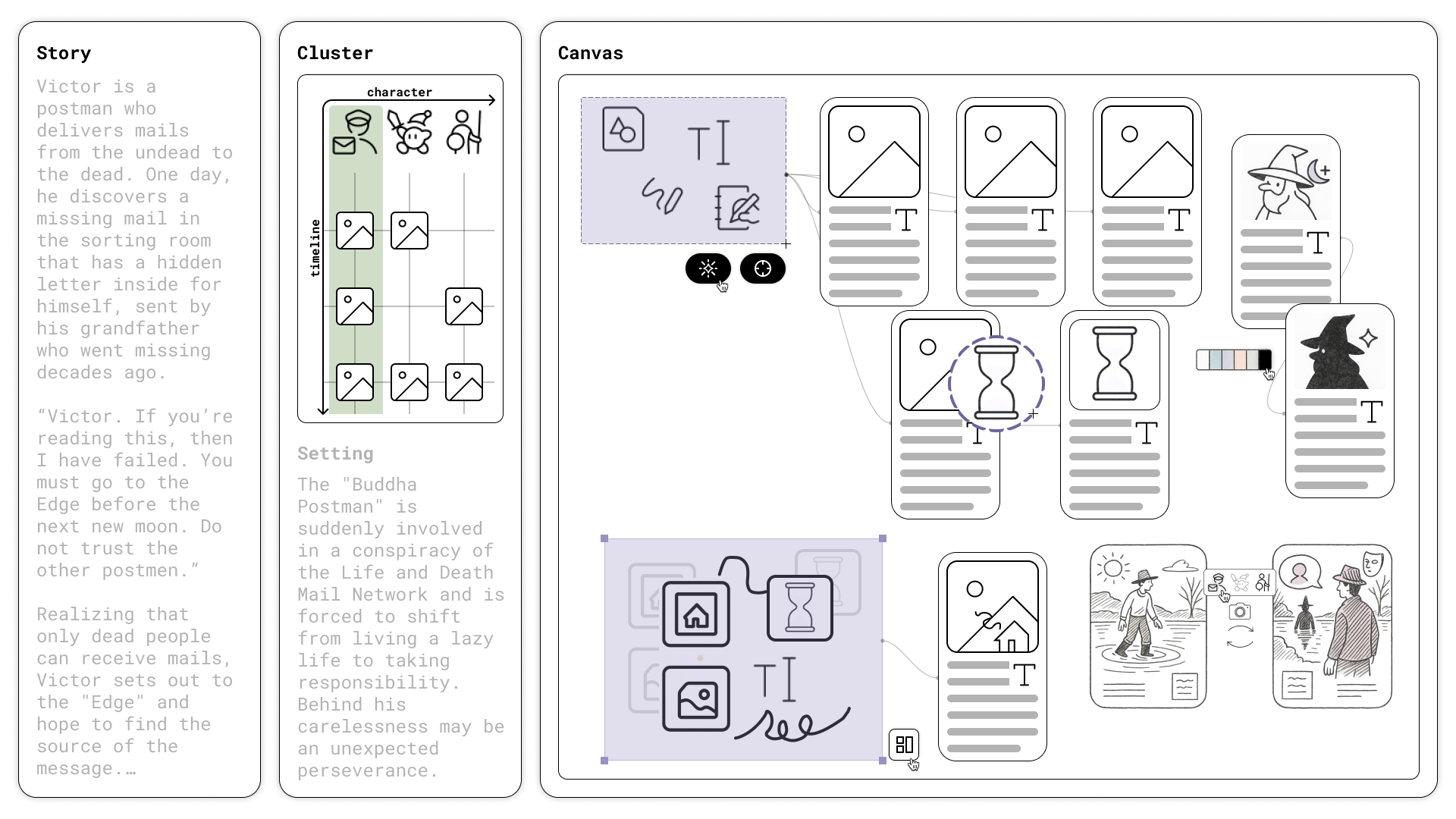

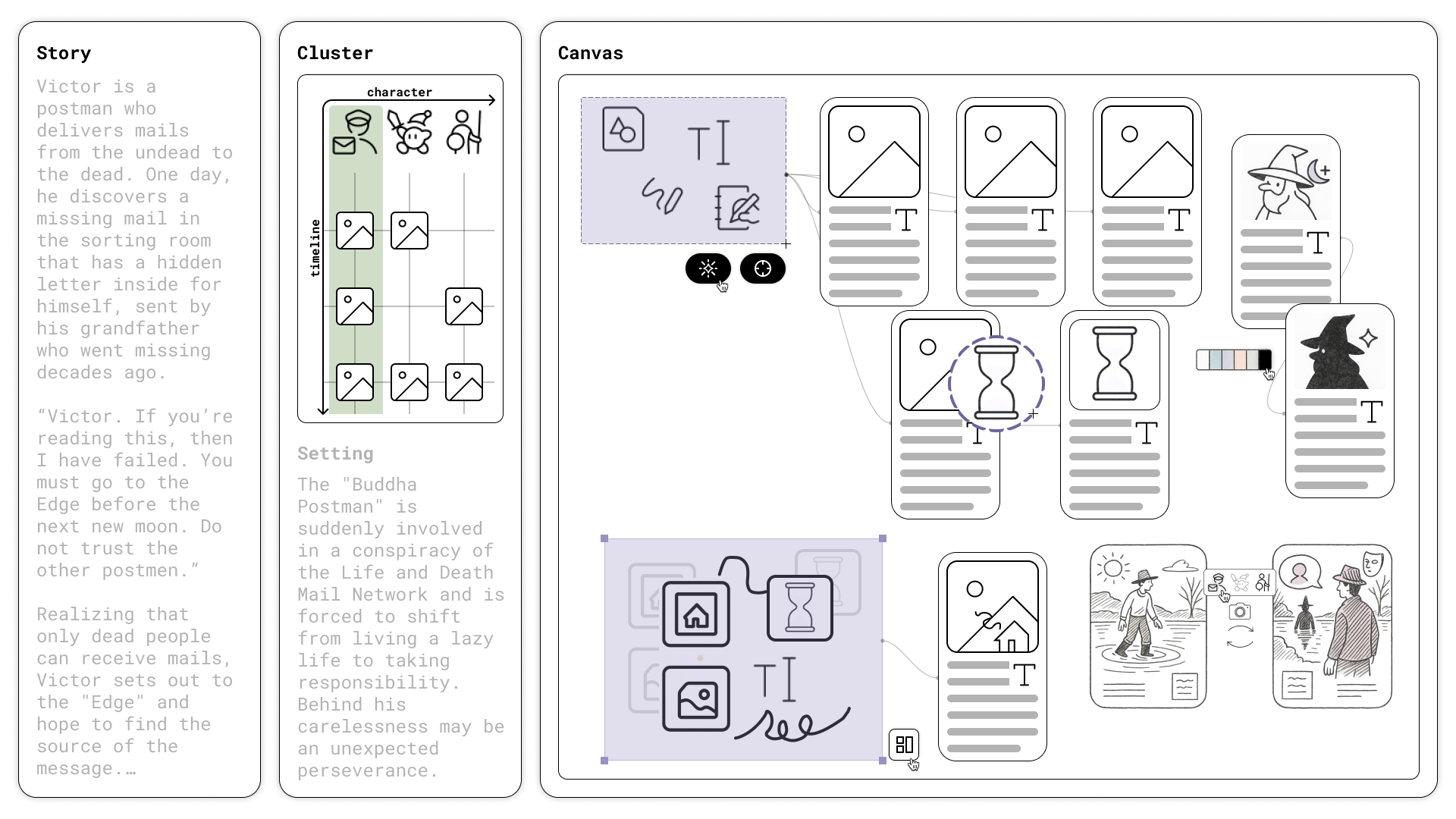

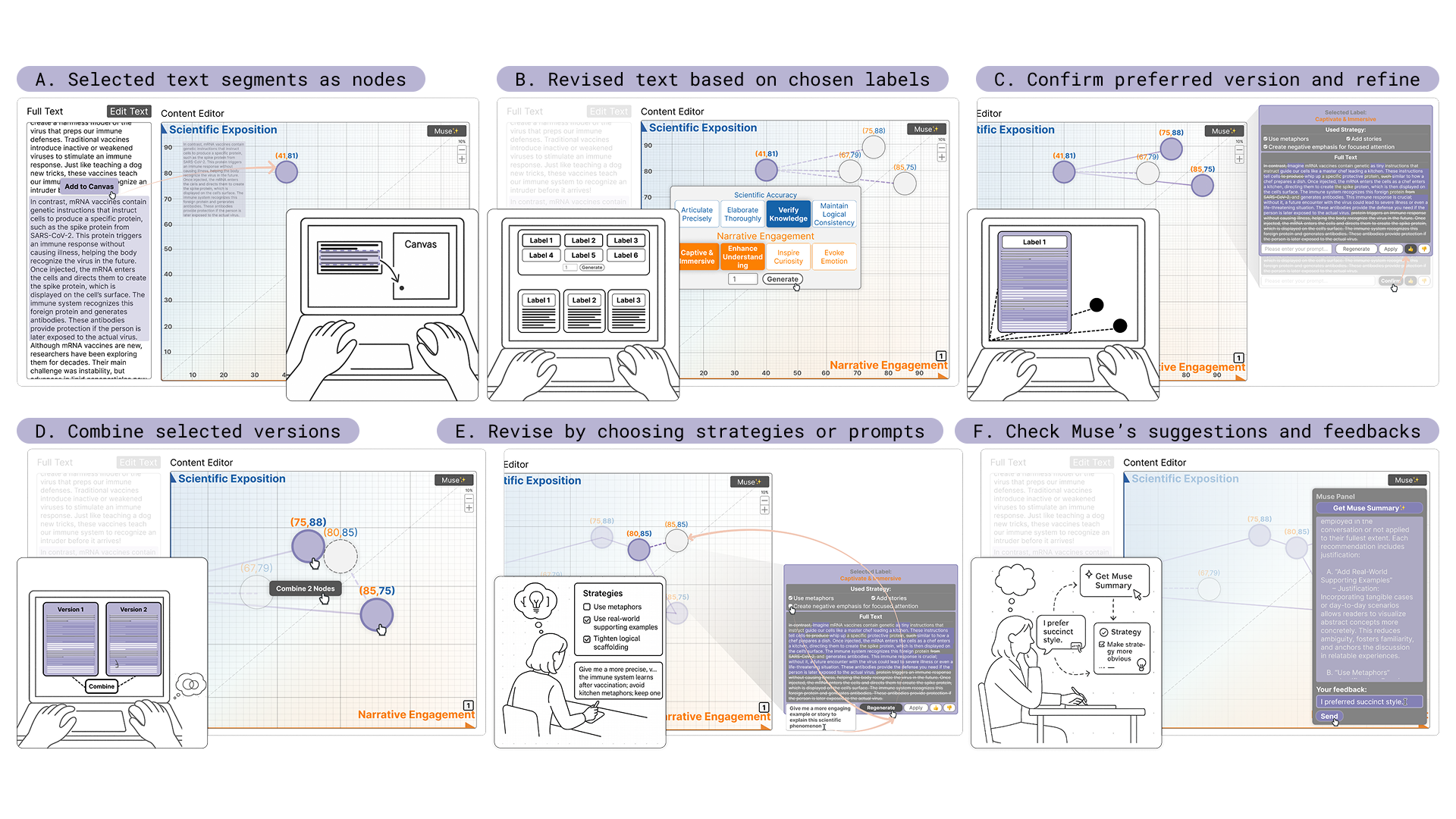

Vistoria: A Multimodal System to Support Fictional Story Writing through Instrumental Text‑Image Co‑Editing

Kexue Fu*, Jingfei Huang*, Long Ling*, Sumin Hong, Yihang Zuo, Ray LC, Toby Jia-Jun Li†.

Hi, I’m Jingfei Huang, a Master of Design Engineering graduate from Harvard University (GSD/SEAS). I have worked with Prof. Jose Luis Garcia del Castillo López and Prof. Alex Haridis from Harvard University, collaborated with Lock Liu at Tencent, and conducted research with Prof. Ray LC at City University of Hong Kong, Prof. Toby Jia-Jun Li at the University of Notre Dame, and Prof. Jiangtao Gong at Tsinghua University.

My research focuses on human–AI interaction for spatial and multimodal computing: designing interfaces and agent workflows that turn urban and visual data into interpretable, controllable support for navigation, creative writing and visualization, and decision-making.

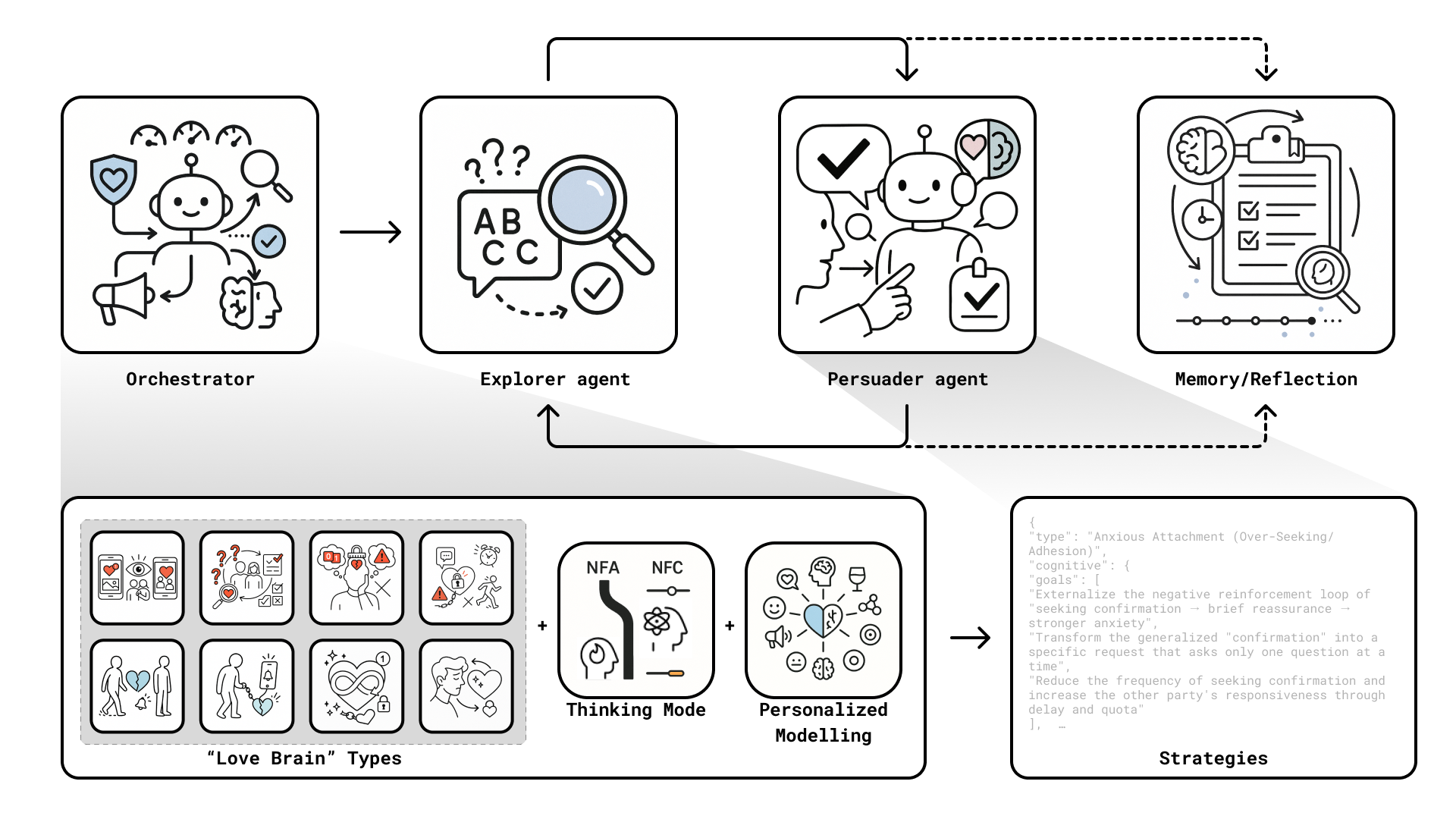

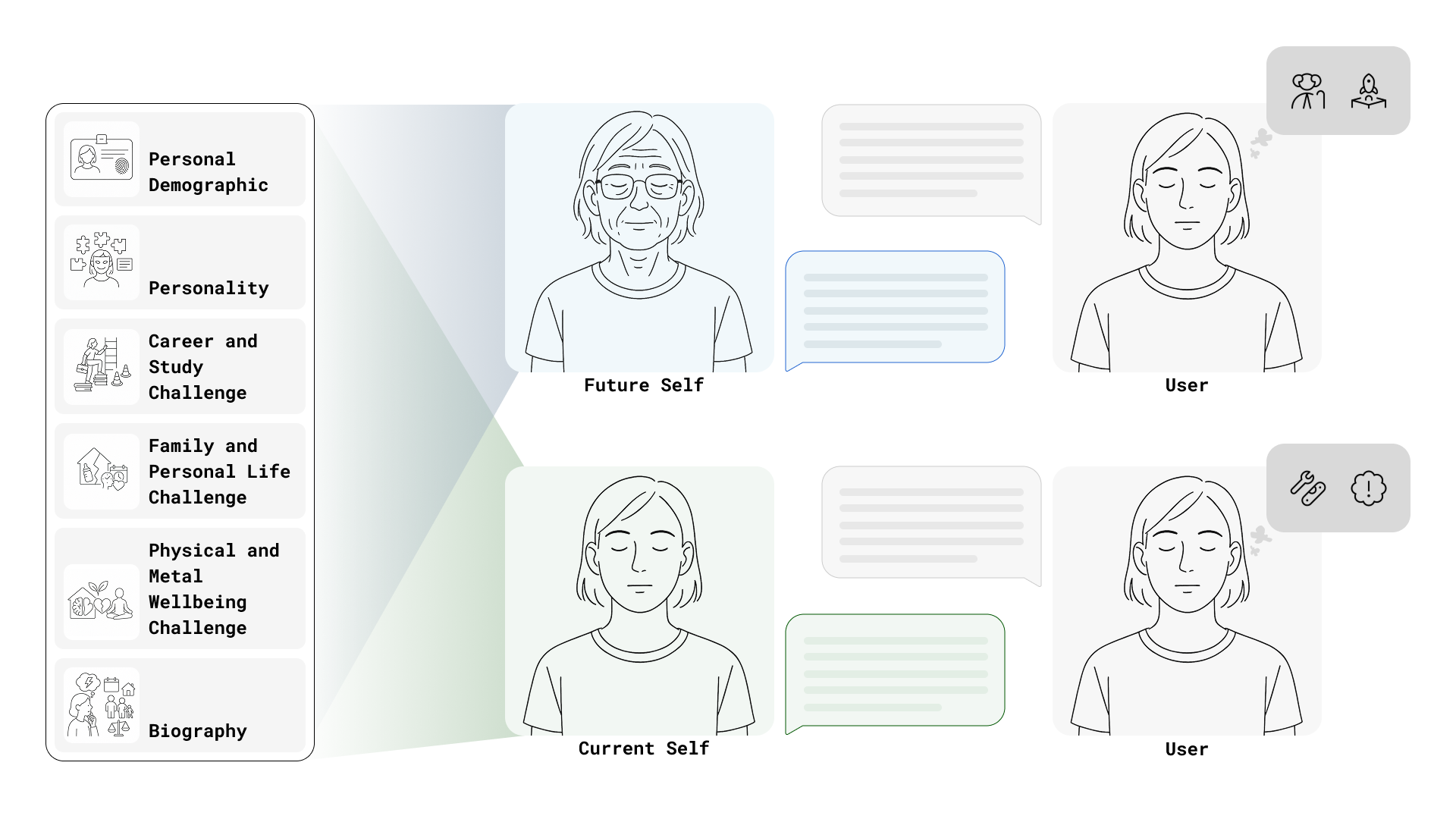

Jingfei Huang*, Hengxu Li*, Tianxin Huang*, Yuanrong Tang, Jiangtao Gong†.

Jingfei Huang*, Yuyao Wang*, Ruyan Chen*, Ray LC†

Kexue Fu*, Jiaye Leng*, Yawen Zhang*, Jingfei Huang, Yihang Zuo, Runze Cai, Zijian Ding, Ray LC, Shengdong Zhao, Qinyuan Lei†

Jingfei Huang*, Xiaole Zeng*, Xinyi Chen*, Ray LC†

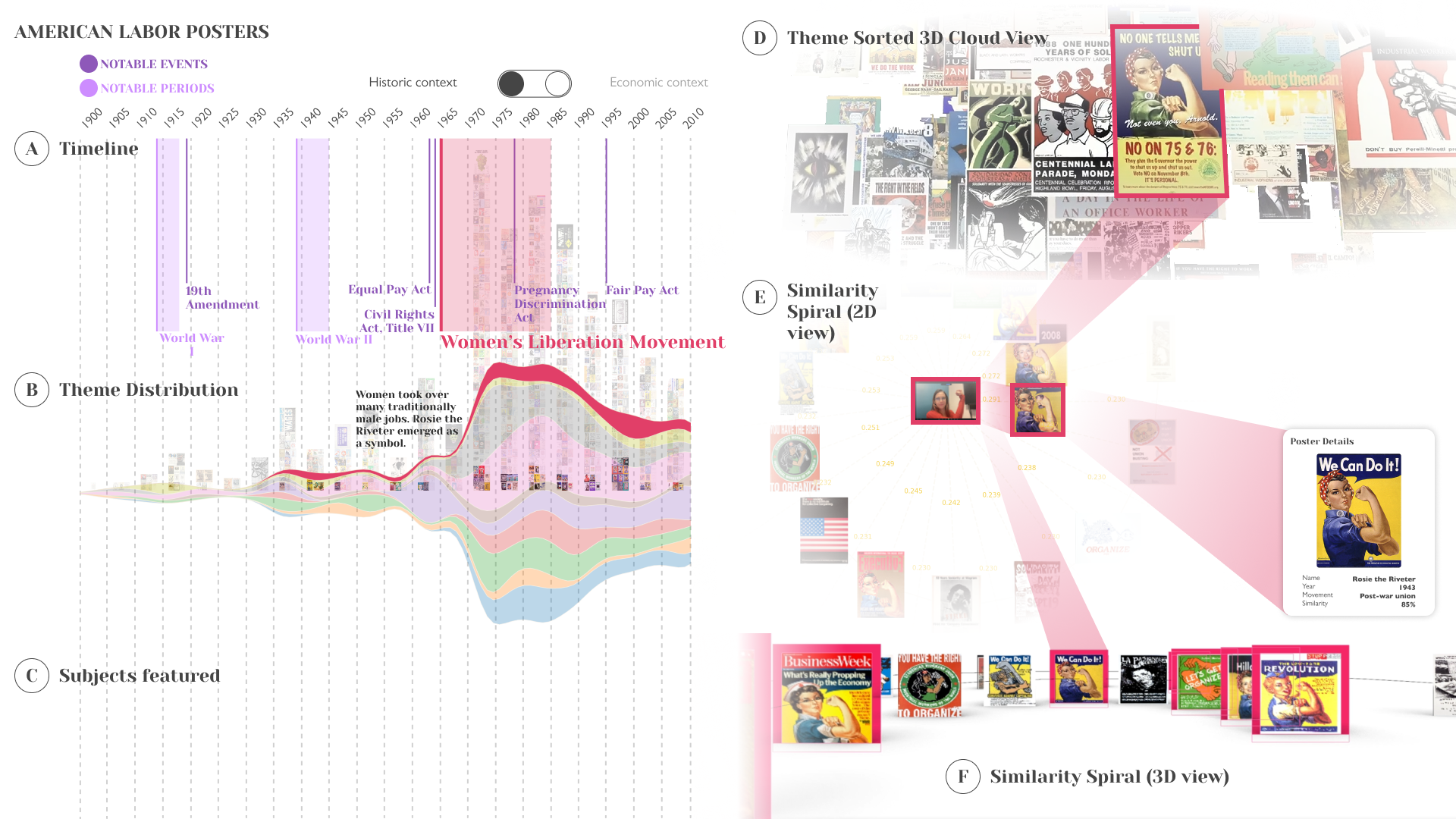

Linh Pham*, Daniel Rodriguez Rodriguez*, Jingfei Huang*, Hui-Ying Suk*

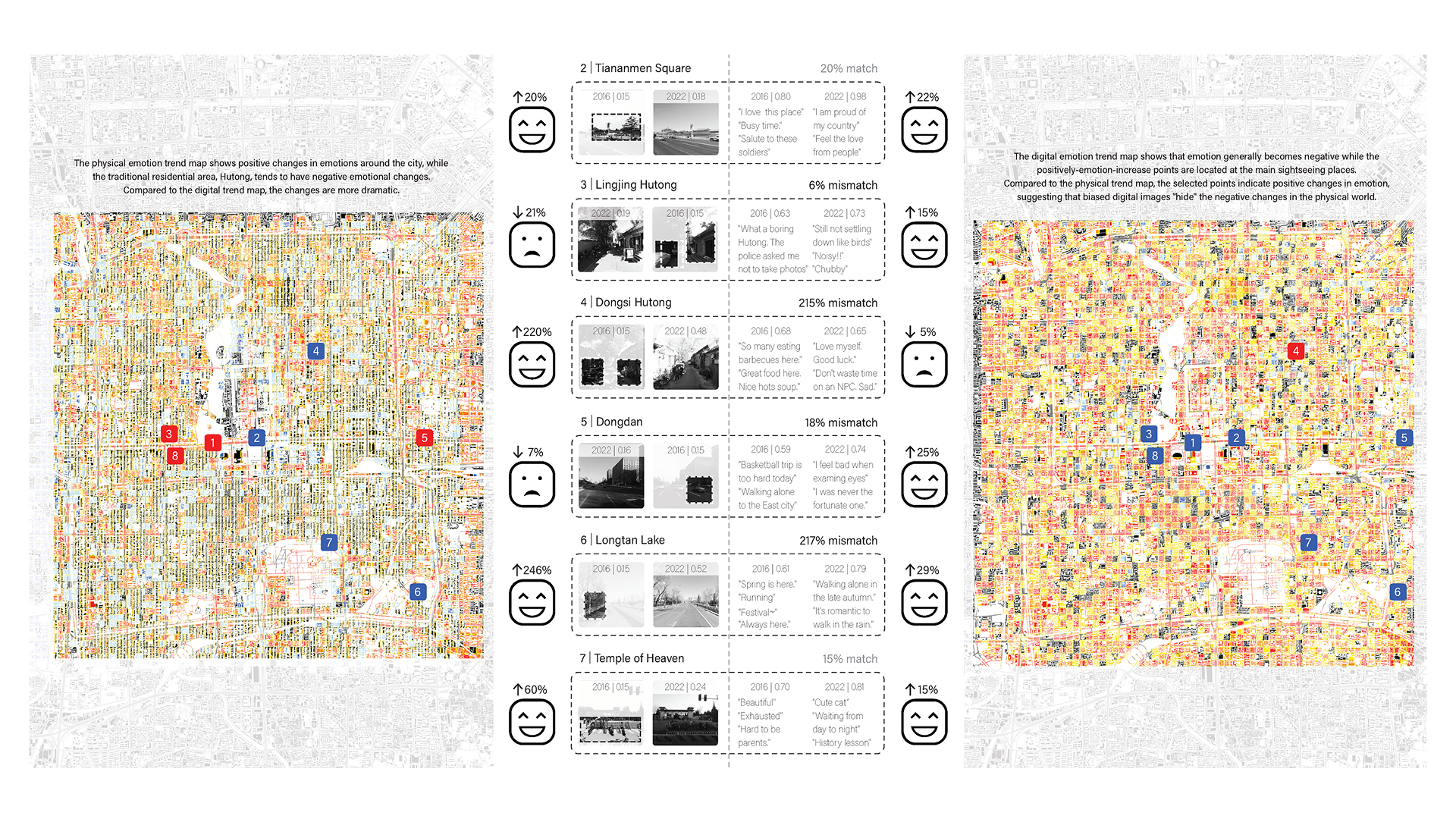

Jingfei Huang, Yan Zhang, Jingyi Ge, Yaning Li, Ray LC†

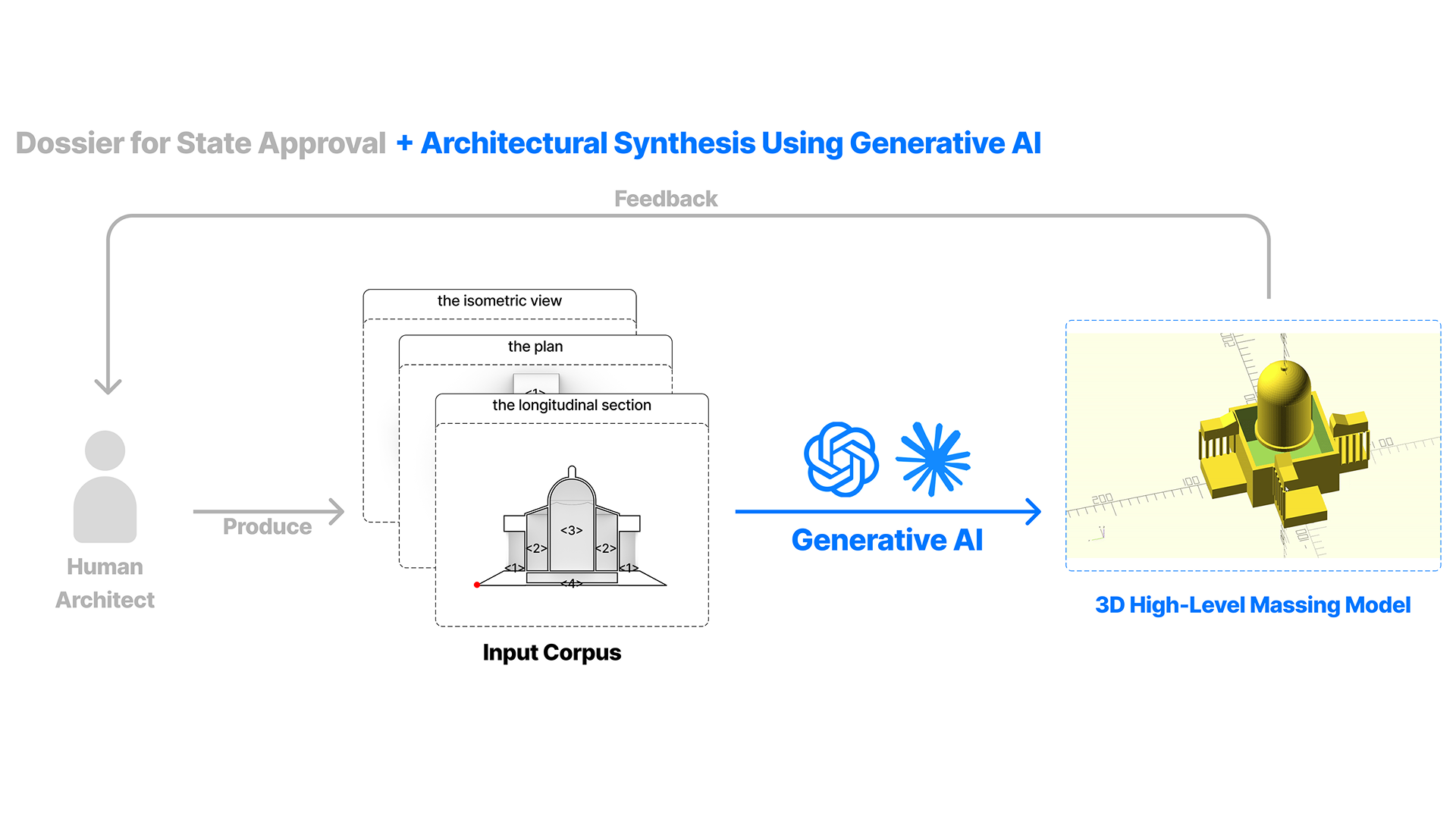

Jingfei Huang, Alexandro Haridis†

Jingfei Huang, Han Tu†

Design, develop, and deploy a map app that computes multimodal, agentic personalization of urban walking routes, foregrounding subjective experiential signals to tailor spatial navigation beyond the shortest path.

Advisor: Jose Luis Garcia del Castillo Lopez.

Designed and developed a multi-agent retrieval and tool-use pipeline for designers that decomposes design-reference search into sub-tasks, with verification and strategy adaptation for robust synthesis.

Collaborated with Tencent; Advisor: Lock Liu.

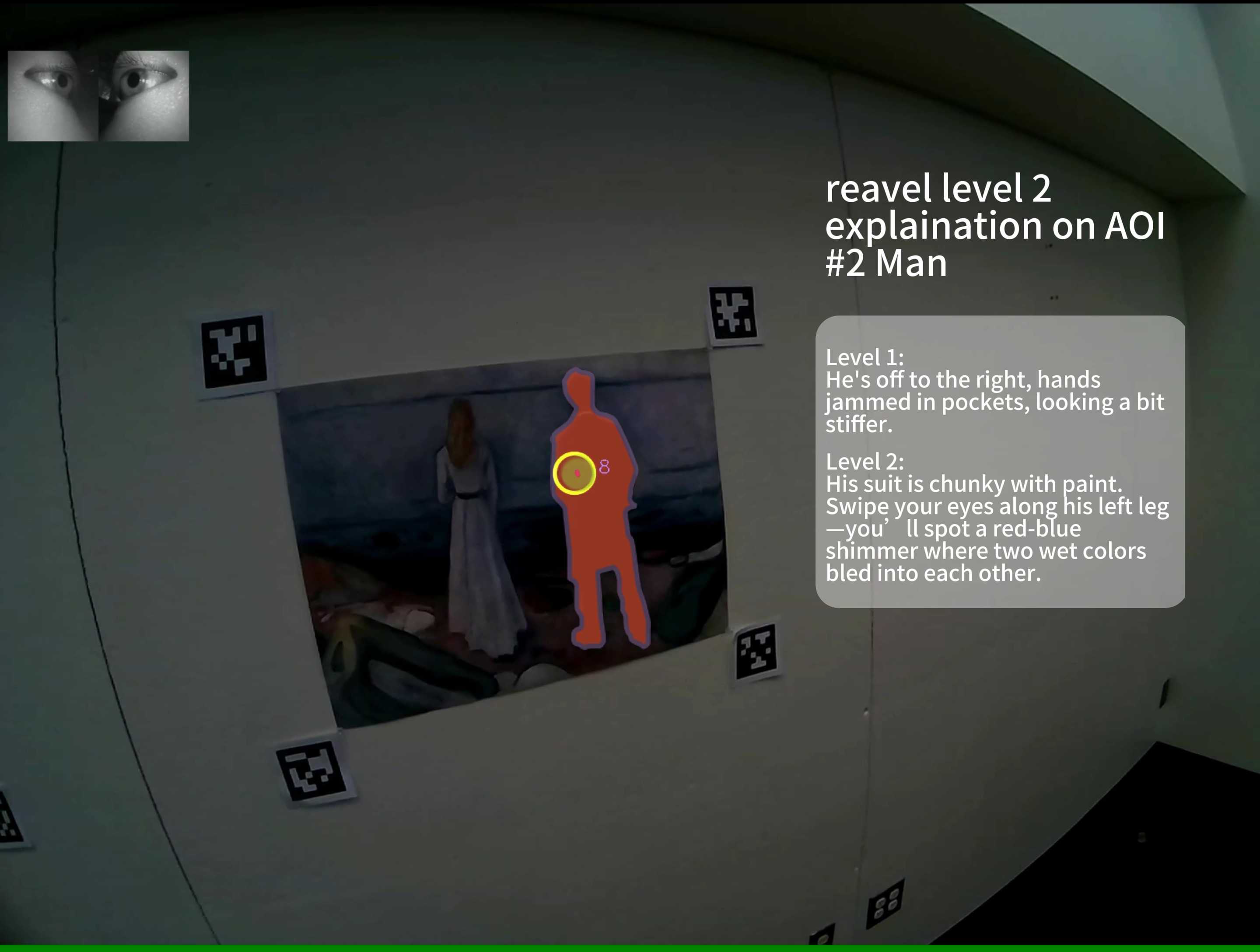

Design and prototype an eye-gaze-based audio guide system for viewing artwork.

Advisors: Juan Pablo Ugarte and Martin Bechthold.

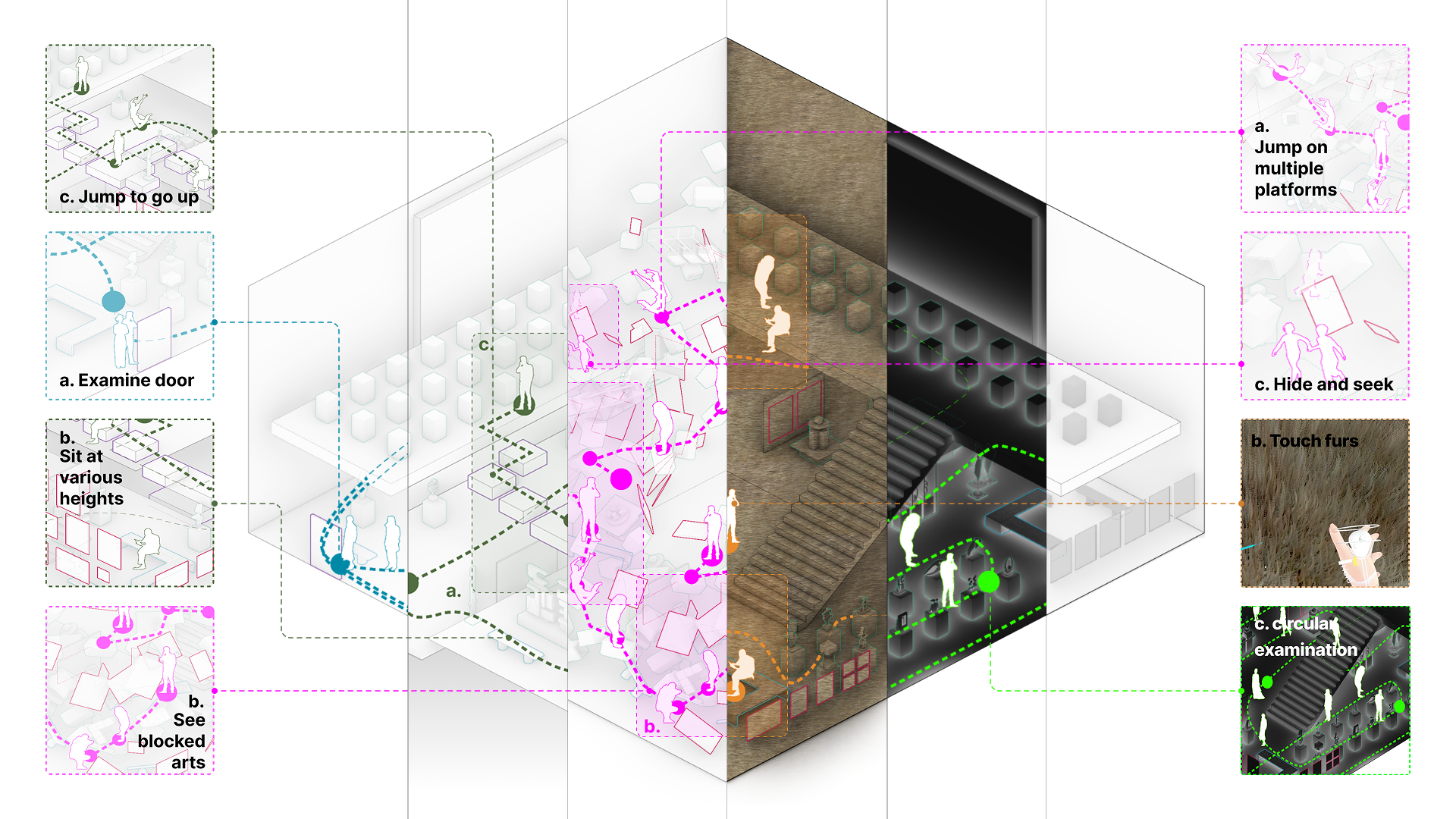

Designed and developed a horror game prototype from scratch (0→1), built the narrative atmosphere and mechanics of each level, and produced the promotional video (PV).

Collaborated with Tencent Studio TIMI.

Designed, developed, and deployed the software component, which embedded a BERT model, displayed sentiment scores, and simulated three-body social interactions among users.

Exhibited at SKF/Konstnärshuset.

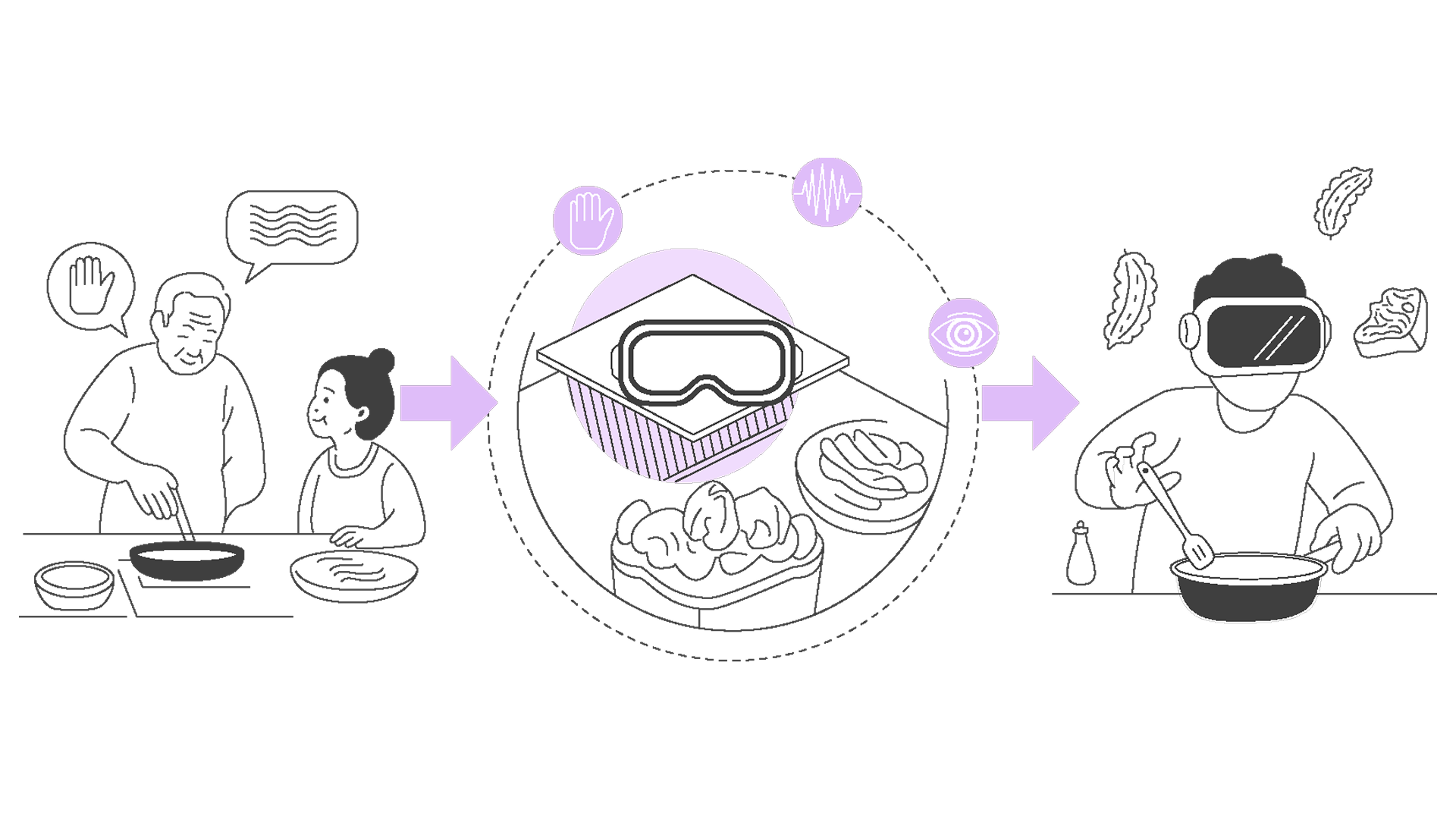

Beesper is a VR application that teaches American Sign Language (ASL) interactively. It provides real-time feedback, making learning both engaging and effective.

Winner of Best Use of Hand Gesture at MIT Reality Hack 2024.

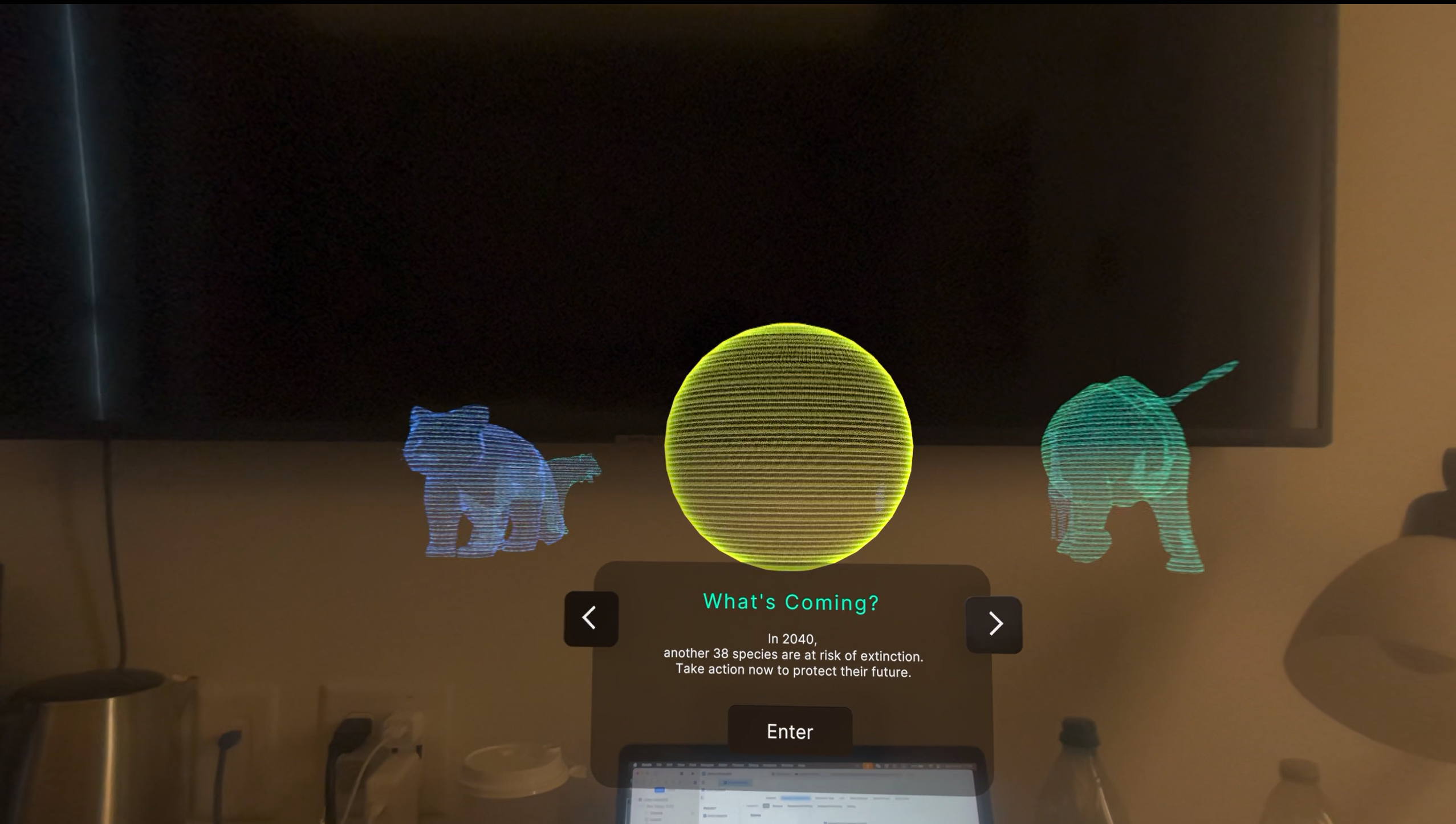

By combining XR and VR, the project immerses users in the lives of these animals, helping them experience the impact of environmental degradation, habitat loss, and human activities from a first-hand perspective.

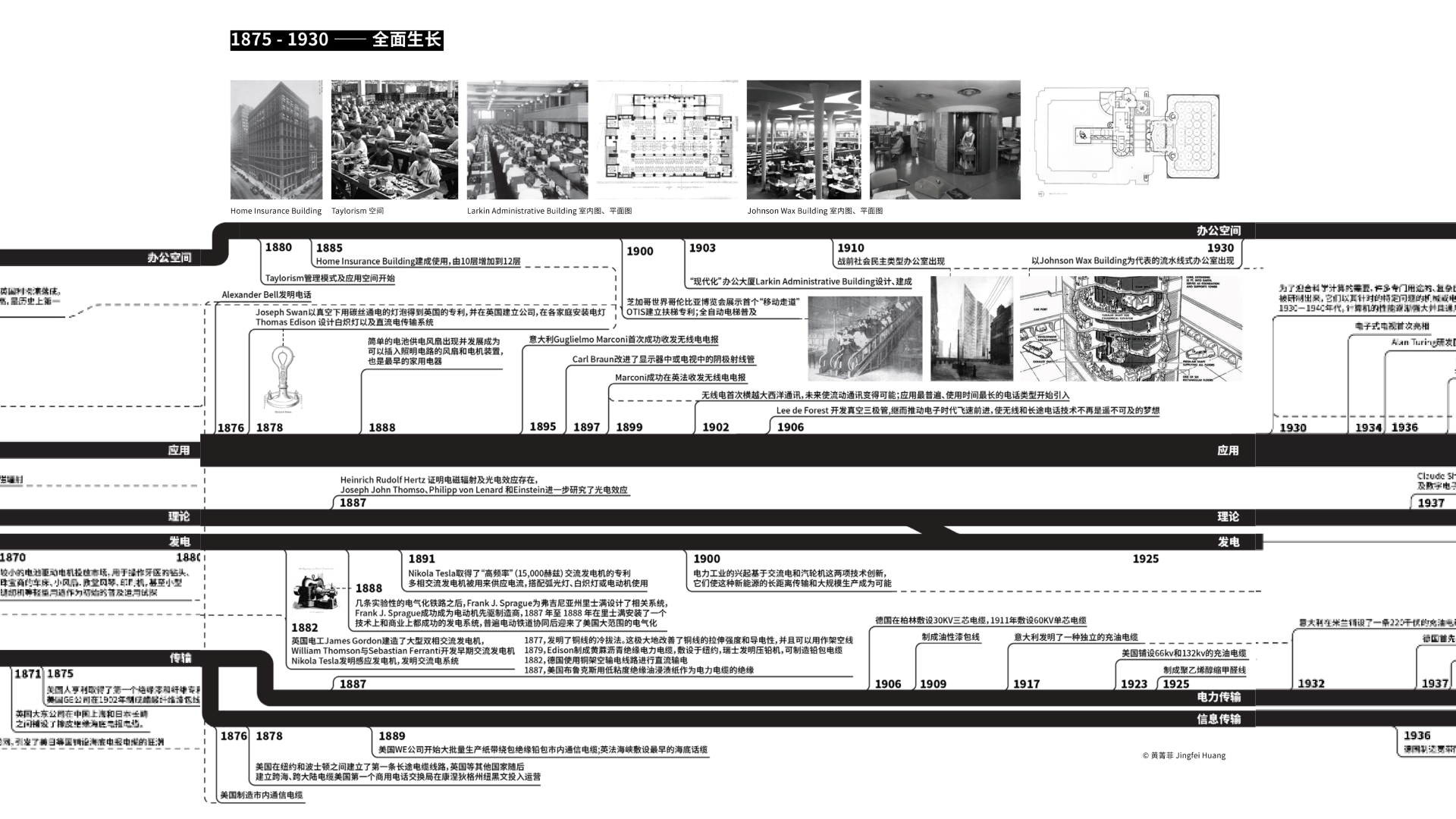

Conducted philosophy-of-technology research on electricity, focusing on the relationship between digital interfaces and human interaction across scales. Published privately.

Advisor: Jia Weng.